import pandas as pdJavascript Object Notation(JSON) is a widely used format for storing and exchanging data. Coming from the relational database, it could be difficult to understand NoSQL databases that use JSON to store data and similarly REST API’s response. JSON is also used in storing football event data. It allows easy addition of features in the future.

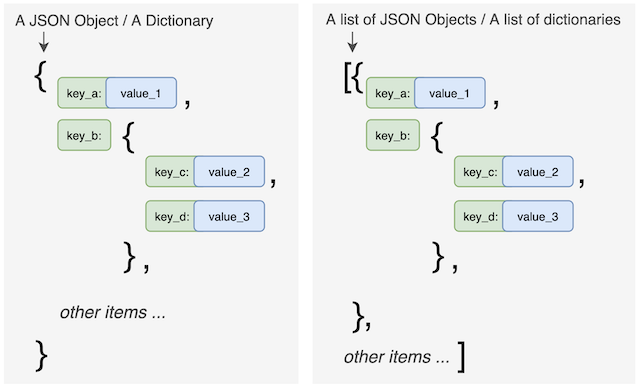

Though JSON format allows for easier exchange of data, for analysis, a tabular form would be appropriate. A JSON structure can be of two forms: a JSON object and list of JSON objects. Since our programming language of choice is Python, those structures can be somewhat called as a dictionary object or list of dicts.

Importing pandas library,

1 Flattening a simple JSON

A dict

Let us consider a simple dictionary: 3 keys and their respective values.

viv = {

"player_id" : 15623,

"player_name" : "Vivianne Miedema",

"jersey_number" : 11}

viv{'player_id': 15623, 'player_name': 'Vivianne Miedema', 'jersey_number': 11}We use the json_normalize API2 to flatten a JSON dict.

df = pd.json_normalize(viv);df| player_id | player_name | jersey_number | |

|---|---|---|---|

| 0 | 15623 | Vivianne Miedema | 11 |

df.info()<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1 entries, 0 to 0

Data columns (total 3 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 player_id 1 non-null int64

1 player_name 1 non-null object

2 jersey_number 1 non-null int64

dtypes: int64(2), object(1)

memory usage: 152.0+ bytesSide Note: If the data contains something that is not compatible with python, in this case a

nullvariable, there are two choices:

- Change

nulltoNone- Pass the data through

json.loadsfunction

Change null to None

null = None

viv1 = { "player_id" : 15623, "player_name" : "Vivianne Miedema", "jersey_number" : 11, "player_nickname" : null}

viv1{'player_id': 15623,

'player_name': 'Vivianne Miedema',

'jersey_number': 11,

'player_nickname': None}Make data as string and pass to json.loads

import json

viv1 = '{ "player_id" : 15623, "player_name" : "Vivianne Miedema", "jersey_number" : 11, "player_nickname" : null}'

viv1 = json.loads(viv1)

viv1{'player_id': 15623,

'player_name': 'Vivianne Miedema',

'jersey_number': 11,

'player_nickname': None}1.1 A list of dicts

player_list = [

{ "player_id" : 15623, "player_name" : "Vivianne Miedema", "jersey_number" : 11, "player_nickname" : null },

{ "player_id" : 10658, "player_name" : "Danielle van de Donk", "jersey_number" : 7, "player_nickname" : null }

]

pd.json_normalize(player_list)| player_id | player_name | jersey_number | player_nickname | |

|---|---|---|---|---|

| 0 | 15623 | Vivianne Miedema | 11 | None |

| 1 | 10658 | Danielle van de Donk | 7 | None |

We have the JSON list of dicts in a tabular form. All the keys become columns and their values as entries.

When we flattern a list with a key-value pair missing for an entry, instead of an error, NaN(not a number) is stored.

player_list = [

{ "player_id" : 15623, "player_name" : "Vivianne Miedema", "jersey_number" : 11, "player_nickname" : null },

{ "player_id" : 10658, "player_name" : "Danielle van de Donk"}

]

pd.json_normalize(player_list)| player_id | player_name | jersey_number | player_nickname | |

|---|---|---|---|---|

| 0 | 15623 | Vivianne Miedema | 11.0 | NaN |

| 1 | 10658 | Danielle van de Donk | NaN | NaN |

Note: See how player_nickname when not specified also turns to NaN from None.

2 Flattening a multi-level JSON

2.1 A simple dict

at_kick0ff = {

"id":"d712fb93-c464-4621-98ba-f2bdcd5641db",

"timestamp":"00:00:00.000",

"duration":0.0,

"lineup":{

"player":{

"id":15623,

"name":"Vivianne Miedema"

},

"position":{

"id":23,

"name":"Center Forward"

},

"jersey_number":11

}

}

at_kick0ff{'id': 'd712fb93-c464-4621-98ba-f2bdcd5641db',

'timestamp': '00:00:00.000',

'duration': 0.0,

'lineup': {'player': {'id': 15623, 'name': 'Vivianne Miedema'},

'position': {'id': 23, 'name': 'Center Forward'},

'jersey_number': 11}}pd.json_normalize(at_kick0ff)| id | timestamp | duration | lineup.player.id | lineup.player.name | lineup.position.id | lineup.position.name | lineup.jersey_number | |

|---|---|---|---|---|---|---|---|---|

| 0 | d712fb93-c464-4621-98ba-f2bdcd5641db | 00:00:00.000 | 0.0 | 15623 | Vivianne Miedema | 23 | Center Forward | 11 |

You can see that lineup dictionary key’s nested key-value pairs have been expanded into individual columns. If you feel that is unnecessary, we can restrict expansion by using max_level argument. With max_level=1, the flattening goes one level deeper.

pd.json_normalize(at_kick0ff, max_level=1)| id | timestamp | duration | lineup.player | lineup.position | lineup.jersey_number | |

|---|---|---|---|---|---|---|

| 0 | d712fb93-c464-4621-98ba-f2bdcd5641db | 00:00:00.000 | 0.0 | {'id': 15623, 'name': 'Vivianne Miedema'} | {'id': 23, 'name': 'Center Forward'} | 11 |

2.2 A list of dicts

first_pass = [

{

"id":"15758edb-58cd-49c4-a817-d2ef48ba3bcf",

"timestamp":"00:00:00.504",

"type":{

"id":30,

"name":"Pass"

},

"play_pattern":{

"id":9,

"name":"From Kick Off"

},

"player":{

"id":15623,

"name":"Vivianne Miedema"

},

"pass":{

"recipient":{

"id":10666,

"name":"Dominique Johanna Anna Bloodworth"

},

"length":25.455845,

"angle":-2.3561945,

"height":{

"id":1,

"name":"Ground Pass"

},

"end_location":[

42.0,

22.0

]

}

}, {

"id" : "ab5674a4-e824-4143-9f6f-3f1645557413",

"timestamp" : "00:00:04.201",

"type" : {

"id" : 30,

"name" : "Pass"

},

"play_pattern" : {

"id" : 9,

"name" : "From Kick Off"

},

"player" : {

"id" : 10666,

"name" : "Dominique Johanna Anna Bloodworth"

},

"location" : [ 45.0, 29.0 ],

"duration" : 1.795201,

"pass" : {

"length" : 51.62364,

"angle" : 0.55038595,

"height" : {

"id" : 3,

"name" : "High Pass"

},

"end_location" : [ 89.0, 56.0 ]

}

}

]

pd.json_normalize(first_pass)| id | timestamp | type.id | type.name | play_pattern.id | play_pattern.name | player.id | player.name | pass.recipient.id | pass.recipient.name | pass.length | pass.angle | pass.height.id | pass.height.name | pass.end_location | location | duration | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 15758edb-58cd-49c4-a817-d2ef48ba3bcf | 00:00:00.504 | 30 | Pass | 9 | From Kick Off | 15623 | Vivianne Miedema | 10666.0 | Dominique Johanna Anna Bloodworth | 25.455845 | -2.356194 | 1 | Ground Pass | [42.0, 22.0] | NaN | NaN |

| 1 | ab5674a4-e824-4143-9f6f-3f1645557413 | 00:00:04.201 | 30 | Pass | 9 | From Kick Off | 10666 | Dominique Johanna Anna Bloodworth | NaN | NaN | 51.623640 | 0.550386 | 3 | High Pass | [89.0, 56.0] | [45.0, 29.0] | 1.795201 |

Limiting the levels…

pd.json_normalize(first_pass, max_level=0)| id | timestamp | type | play_pattern | player | pass | location | duration | |

|---|---|---|---|---|---|---|---|---|

| 0 | 15758edb-58cd-49c4-a817-d2ef48ba3bcf | 00:00:00.504 | {'id': 30, 'name': 'Pass'} | {'id': 9, 'name': 'From Kick Off'} | {'id': 15623, 'name': 'Vivianne Miedema'} | {'recipient': {'id': 10666, 'name': 'Dominique... | NaN | NaN |

| 1 | ab5674a4-e824-4143-9f6f-3f1645557413 | 00:00:04.201 | {'id': 30, 'name': 'Pass'} | {'id': 9, 'name': 'From Kick Off'} | {'id': 10666, 'name': 'Dominique Johanna Anna ... | {'length': 51.62364, 'angle': 0.55038595, 'hei... | [45.0, 29.0] | 1.795201 |

3 Flattening a JSON nested list

3.1 A simple dict

For this case, let us consider a simpler example than of football event data. The key info has list of dictionaries inside its structure. We call it nested dict.

awfc = {

'team': 'AWFC',

'location': 'London',

'ranking': 1,

'info': {

'manager': 'Joe',

'contacts': {

'email': {

'coaching': 'joe@afc.com',

'general': 'info@afc.com'

},

'tel': '123456789',

}

},

'players': [

{ 'name': 'Viv' },

{ 'name': 'DvD' },

{ 'name': 'Kim' }

],

};awfc{'team': 'AWFC',

'location': 'London',

'ranking': 1,

'info': {'manager': 'Joe',

'contacts': {'email': {'coaching': 'joe@afc.com', 'general': 'info@afc.com'},

'tel': '123456789'}},

'players': [{'name': 'Viv'}, {'name': 'DvD'}, {'name': 'Kim'}]}The players column has a list of dicts. So, we can flatten that column using record_path argument.

pd.json_normalize(awfc, record_path=['players'])| name | |

|---|---|

| 0 | Viv |

| 1 | DvD |

| 2 | Kim |

But, making a separate table with no reference id has no meaning. To prevent that we can append revelant columns to the new table using meta argument. Here we want their team and Telephone number. The tel key lies within info->contacts->tel. So, we need provide that path like so ['info', 'contacts', 'tel'].

pd.json_normalize(awfc, record_path=['players'], meta=['team',['info', 'contacts', 'tel']])| name | team | info.contacts.tel | |

|---|---|---|---|

| 0 | Viv | AWFC | 123456789 |

| 1 | DvD | AWFC | 123456789 |

| 2 | Kim | AWFC | 123456789 |

The order in which those paths are mentioned, the order in which those columns are appended.

pd.json_normalize(awfc, record_path=['players'], meta=['team',['info', 'contacts', 'tel'],['info', 'manager']])| name | team | info.contacts.tel | info.manager | |

|---|---|---|---|---|

| 0 | Viv | AWFC | 123456789 | Joe |

| 1 | DvD | AWFC | 123456789 | Joe |

| 2 | Kim | AWFC | 123456789 | Joe |

3.2 A list of dicts

json_list = [

{

'team': 'arsenal',

'colour': 'red-white',

'info': {

'staff': {

'physio': 'xxxx',

'doctor': 'yyyy'

}

},

'players': [

{

'name': 'Viv',

'sex': 'F',

'stats': { 'goals': 101, 'assists': 40 }

},

{

'name': 'Beth',

'sex': 'F',

'stats': { 'goals': 60, 'assists': 25 }

},

]

},

{

'team': 'city',

'colour': 'blue',

'info': {

'staff': {

'physio': 'aaaa',

'doctor': 'bbbb'

}

},

'players': [

{ 'name': 'Steph', 'sex': 'F' },

{ 'name': 'Lucy', 'sex': 'F' },

]

},

]

pd.json_normalize(json_list)| team | colour | players | info.staff.physio | info.staff.doctor | |

|---|---|---|---|---|---|

| 0 | arsenal | red-white | [{'name': 'Viv', 'sex': 'F', 'stats': {'goals'... | xxxx | yyyy |

| 1 | city | blue | [{'name': 'Steph', 'sex': 'F'}, {'name': 'Lucy... | aaaa | bbbb |

pd.json_normalize(json_list, record_path =['players'])| name | sex | stats.goals | stats.assists | |

|---|---|---|---|---|

| 0 | Viv | F | 101.0 | 40.0 |

| 1 | Beth | F | 60.0 | 25.0 |

| 2 | Steph | F | NaN | NaN |

| 3 | Lucy | F | NaN | NaN |

How about we now append the players’ team, colour, and their physio.

pd.json_normalize(

json_list,

record_path =['players'],

meta=['team', 'colour', ['info', 'staff', 'physio']]

)| name | sex | stats.goals | stats.assists | team | colour | info.staff.physio | |

|---|---|---|---|---|---|---|---|

| 0 | Viv | F | 101.0 | 40.0 | arsenal | red-white | xxxx |

| 1 | Beth | F | 60.0 | 25.0 | arsenal | red-white | xxxx |

| 2 | Steph | F | NaN | NaN | city | blue | aaaa |

| 3 | Lucy | F | NaN | NaN | city | blue | aaaa |

4 Ignoring key errors

json_list = [

{

'team': 'arsenal',

'colour': 'red-white',

'info': {

'staff': {

'physio': 'xxxx',

'doctor': 'yyyy'

}

},

'players': [

{

'name': 'Viv',

'sex': 'F',

'stats': { 'goals': 101, 'assists': 40 }

},

{

'name': 'Beth',

'sex': 'F',

'stats': { 'goals': 60, 'assists': 25 }

},

]

},

{

'team': 'city',

'colour': 'blue',

'info': {

'staff': {

'doctor': 'bbbb'

}

},

'players': [

{ 'name': 'Steph', 'sex': 'F' },

{ 'name': 'Lucy', 'sex': 'F' },

]

},

]Notice that the key physio is missing from the entry team=city. What happens if we try to access physio key inside meta?

pd.json_normalize(

json_list,

record_path =['players'],

meta=['team', 'colour', ['info', 'staff', 'physio']],

)KeyError: "Key 'physio' not found. To replace missing values of 'physio' with np.nan, pass in errors='ignore'"How come stats.goals and stats.assists didn’t generate an error but that above does? Because, the meta argument expects values to be present for listed keys in meta by default. We can ignore those errors(as suggested) using errors='ignore'

pd.json_normalize(

json_list,

record_path =['players'],

meta=['team', 'colour', ['info', 'staff', 'physio']],

errors='ignore'

)| name | sex | stats.goals | stats.assists | team | colour | info.staff.physio | |

|---|---|---|---|---|---|---|---|

| 0 | Viv | F | 101.0 | 40.0 | arsenal | red-white | xxxx |

| 1 | Beth | F | 60.0 | 25.0 | arsenal | red-white | xxxx |

| 2 | Steph | F | NaN | NaN | city | blue | NaN |

| 3 | Lucy | F | NaN | NaN | city | blue | NaN |

5 Custom separator sep

We notice that by default pandas uses . to indicate the direction of the path. We can change that using the sep argument.

Tip: Usually an

underscoreis used instead of.

json_list = [

{

'team': 'arsenal',

'colour': 'red-white',

'info': {

'staff': {

'physio': 'xxxx',

'doctor': 'yyyy'

}

},

'players': [

{

'name': 'Viv',

'sex': 'F',

'stats': { 'goals': 101, 'assists': 40 }

},

{

'name': 'Beth',

'sex': 'F',

'stats': { 'goals': 60, 'assists': 25 }

},

]

},

{

'team': 'city',

'colour': 'blue',

'info': {

'staff': {

'physio': 'aaaa',

'doctor': 'bbbb'

}

},

'players': [

{ 'name': 'Steph', 'sex': 'F' },

{ 'name': 'Lucy', 'sex': 'F' },

]

},

]pd.json_normalize(

json_list,

record_path =['players'],

meta=['team', 'colour', ['info', 'staff', 'physio']],

sep='->'

)| name | sex | stats->goals | stats->assists | team | colour | info->staff->physio | |

|---|---|---|---|---|---|---|---|

| 0 | Viv | F | 101.0 | 40.0 | arsenal | red-white | xxxx |

| 1 | Beth | F | 60.0 | 25.0 | arsenal | red-white | xxxx |

| 2 | Steph | F | NaN | NaN | city | blue | aaaa |

| 3 | Lucy | F | NaN | NaN | city | blue | aaaa |

6 Adding context to record and meta data using record_prefix and meta_prefix

pd.json_normalize(

json_list,

record_path=['players'],

meta=['team', 'colour', ['info', 'staff', 'physio']],

meta_prefix='meta-',

record_prefix='player-',

sep='->'

)| player-name | player-sex | player-stats->goals | player-stats->assists | meta-team | meta-colour | meta-info->staff->physio | |

|---|---|---|---|---|---|---|---|

| 0 | Viv | F | 101.0 | 40.0 | arsenal | red-white | xxxx |

| 1 | Beth | F | 60.0 | 25.0 | arsenal | red-white | xxxx |

| 2 | Steph | F | NaN | NaN | city | blue | aaaa |

| 3 | Lucy | F | NaN | NaN | city | blue | aaaa |

7 Working with a local file

In most scenarios, we won’t be making new JSON object ourselves instead use JSON formatted files. We make use python’s json module and read the file, then use pandas’ json_normalize to flatten it into a dataframe.

import json

# load data using Python JSON module

with open('movies.json') as f:

data = json.load(f)

# Normalizing data

pd.json_normalize(data)| Title | US Gross | Worldwide Gross | US DVD Sales | Production Budget | Release Date | MPAA Rating | Running Time min | Distributor | Source | Major Genre | Creative Type | Director | Rotten Tomatoes Rating | IMDB Rating | IMDB Votes | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | The Land Girls | 146083 | 146083 | NaN | 8000000 | Jun 12 1998 | R | NaN | Gramercy | None | None | None | None | NaN | 6.1 | 1071.0 |

| 1 | First Love, Last Rites | 10876 | 10876 | NaN | 300000 | Aug 07 1998 | R | NaN | Strand | None | Drama | None | None | NaN | 6.9 | 207.0 |

| 2 | I Married a Strange Person | 203134 | 203134 | NaN | 250000 | Aug 28 1998 | None | NaN | Lionsgate | None | Comedy | None | None | NaN | 6.8 | 865.0 |

| 3 | Four Rooms | 4301000 | 4301000 | NaN | 4000000 | Dec 25 1995 | R | NaN | Miramax | Original Screenplay | Comedy | Contemporary Fiction | Robert Rodriguez | 14.0 | 6.4 | 34328.0 |

| 4 | The Four Seasons | 42488161 | 42488161 | NaN | 6500000 | May 22 1981 | None | NaN | Universal | Original Screenplay | Comedy | Contemporary Fiction | Alan Alda | 71.0 | 7.0 | 1814.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 63 | Big Things | 0 | 0 | NaN | 50000 | Dec 31 2009 | None | NaN | None | None | None | None | None | NaN | NaN | NaN |

| 64 | Bogus | 4357406 | 4357406 | NaN | 32000000 | Sep 06 1996 | PG | NaN | Warner Bros. | Original Screenplay | Comedy | Fantasy | Norman Jewison | 40.0 | 4.8 | 2742.0 |

| 65 | Beverly Hills Cop | 234760478 | 316300000 | NaN | 15000000 | Dec 05 1984 | None | NaN | Paramount Pictures | Original Screenplay | Action | Contemporary Fiction | Martin Brest | 83.0 | 7.3 | 45065.0 |

| 66 | Beverly Hills Cop II | 153665036 | 276665036 | NaN | 20000000 | May 20 1987 | R | NaN | Paramount Pictures | Original Screenplay | Action | Contemporary Fiction | Tony Scott | 46.0 | 6.1 | 29712.0 |

| 67 | Beverly Hills Cop III | 42586861 | 119180938 | NaN | 50000000 | May 25 1994 | R | NaN | Paramount Pictures | Original Screenplay | Action | Contemporary Fiction | John Landis | 10.0 | 5.0 | 21199.0 |

68 rows × 16 columns

8 Working with URL

Reading a JSON file from an url needs an extra module in requests as any data from the Internet carries overheads that are necessary for efficient exchange of information(REST API). So, in order to read the file contents, we call upon requests’ text attribute which fetches the contents of the file.

Here, we use json.loads and not json.load as loads function expects contents(string) rather than a file pointer. If looked closely into the json module, the load calls loads using read() on the file.

import requests

URL = 'https://vega.github.io/vega-datasets/data/cars.json'

data = json.loads(requests.get(URL).text)

pd.json_normalize(data)| Name | Miles_per_Gallon | Cylinders | Displacement | Horsepower | Weight_in_lbs | Acceleration | Year | Origin | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | chevrolet chevelle malibu | 18.0 | 8 | 307.0 | 130.0 | 3504 | 12.0 | 1970-01-01 | USA |

| 1 | buick skylark 320 | 15.0 | 8 | 350.0 | 165.0 | 3693 | 11.5 | 1970-01-01 | USA |

| 2 | plymouth satellite | 18.0 | 8 | 318.0 | 150.0 | 3436 | 11.0 | 1970-01-01 | USA |

| 3 | amc rebel sst | 16.0 | 8 | 304.0 | 150.0 | 3433 | 12.0 | 1970-01-01 | USA |

| 4 | ford torino | 17.0 | 8 | 302.0 | 140.0 | 3449 | 10.5 | 1970-01-01 | USA |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 401 | ford mustang gl | 27.0 | 4 | 140.0 | 86.0 | 2790 | 15.6 | 1982-01-01 | USA |

| 402 | vw pickup | 44.0 | 4 | 97.0 | 52.0 | 2130 | 24.6 | 1982-01-01 | Europe |

| 403 | dodge rampage | 32.0 | 4 | 135.0 | 84.0 | 2295 | 11.6 | 1982-01-01 | USA |

| 404 | ford ranger | 28.0 | 4 | 120.0 | 79.0 | 2625 | 18.6 | 1982-01-01 | USA |

| 405 | chevy s-10 | 31.0 | 4 | 119.0 | 82.0 | 2720 | 19.4 | 1982-01-01 | USA |

406 rows × 9 columns

9 Conclusion

We saw the use of json_normalize function in pandas library. It helps take a JSON data, flatten it, and make it as a dataframe for easier analysis.